Building a Real-time Scoring System for the NPGL

This Tuesday in Madison Square Garden, the National Pro Grid League will have its first regular season match. Three months ago, we started building the real-time scoring system that backs its events. Here’s how we did it:

Madison Square Garden

The Task at Hand

The National Pro Grid League is a new, professional spectator sport with athletes competing in human-performance racing. Matches in the NPGL are made up of 11 individual races, in which two teams compete head-to-head in a variety of strength and bodyweight exercises.

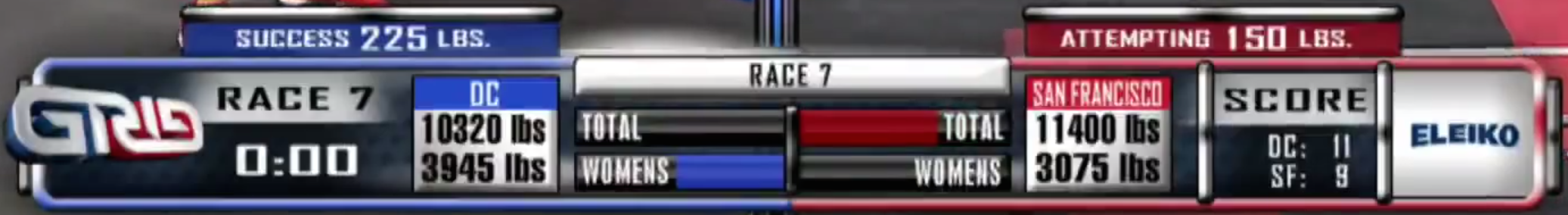

As the races progress, a live display shows each team’s progress through the race – for example, the size of their lead over the opposing team, or each athlete’s completed and remaining rep counts. This information is also embedded in a live stream of the event, currently broadcast on YouTube but soon to be on NBC Sports. Our task was to build the system that collects the data during these races and makes it available to the graphics displayed on the Jumbotron and TV.

Live TV Graphics

High Stakes in Sin City

The first test of our system came at NPGL’s Vegas Combine, the culmination of the summer tryouts for the sport. Two hundred of the top athletes from around the country had gathered at the Orleans Arena for three days of non-stop matches, with the final team standing guaranteed a spot in the league.

We knew that building reliability into our system was important, but we only fully realized how important once we were in Vegas. The final few matches were all streamed live, and a full television crew was on site for the job. Stressful hardly begins to describe the environment inside a production truck. It’s controlled chaos where everyone has to work together, and live TV doesn’t leave much margin for error. Needless to say, we didn’t want to be the ones to ruin the show!

Inside the TV Truck

Pulling the Plug

Although internet connectivity was available, we didn’t want to rely on it during an event – depending on it would have exposed us to a number of problems that could ruin our day. Keeping everything local also eliminated some of our concerns about latency during a race. So we brought our own server with us and wired everything up on a local network.

Since the matches can also be followed online, our local server periodically “phones home”. We do so out-of-band from the rest of the system, so that a network outage isn’t catastrophic.
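
As a rough illustration of what "out-of-band" can mean in practice, here is a minimal sketch of a background upload loop. The names `startPhoneHome`, `getSnapshot`, and `upload` are hypothetical and not taken from the actual system; `upload` is assumed to return a promise. The idea is that a failed upload is caught and counted rather than propagated, so the local scoring path never waits on the internet.

```javascript
// Hypothetical sketch: periodically push the latest snapshot upstream,
// without ever letting a network failure reach the local scoring path.
function startPhoneHome({ getSnapshot, upload, intervalMs }) {
  const stats = { sent: 0, failed: 0 };
  let inFlight = false;

  async function tick() {
    if (inFlight) return; // previous upload still pending, skip this round
    inFlight = true;
    try {
      await upload(getSnapshot());
      stats.sent += 1;
    } catch (err) {
      // Outage or timeout: note it and simply try again on the next tick.
      stats.failed += 1;
    } finally {
      inFlight = false;
    }
  }

  const timer = setInterval(tick, intervalMs);
  return { tick, stats, stop: () => clearInterval(timer) };
}
```

Because each tick sends a fresh snapshot, a missed upload needs no special recovery in this sketch: the next successful tick brings the remote copy up to date.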

Our Aleutia server

Moving to the Browser

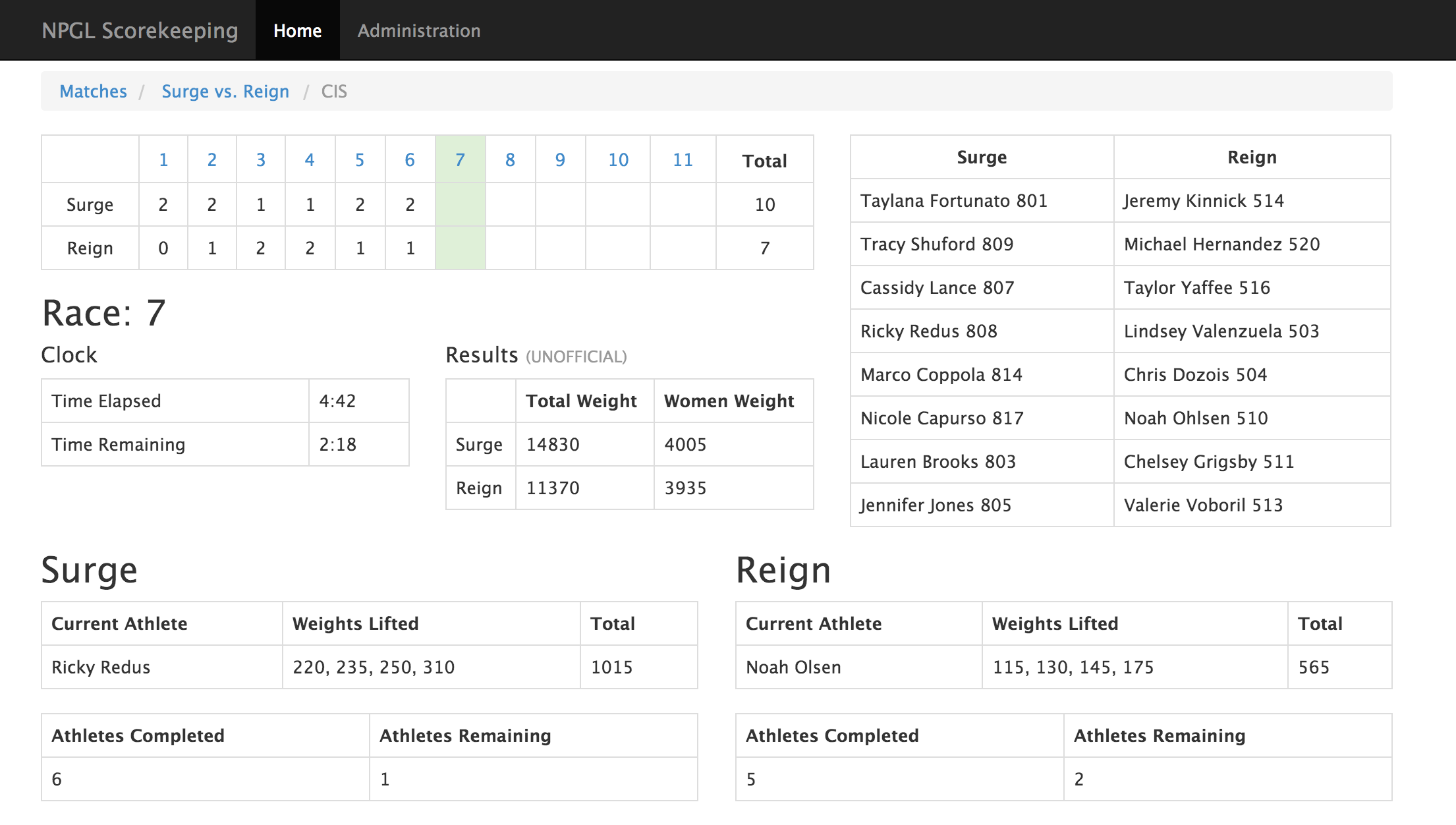

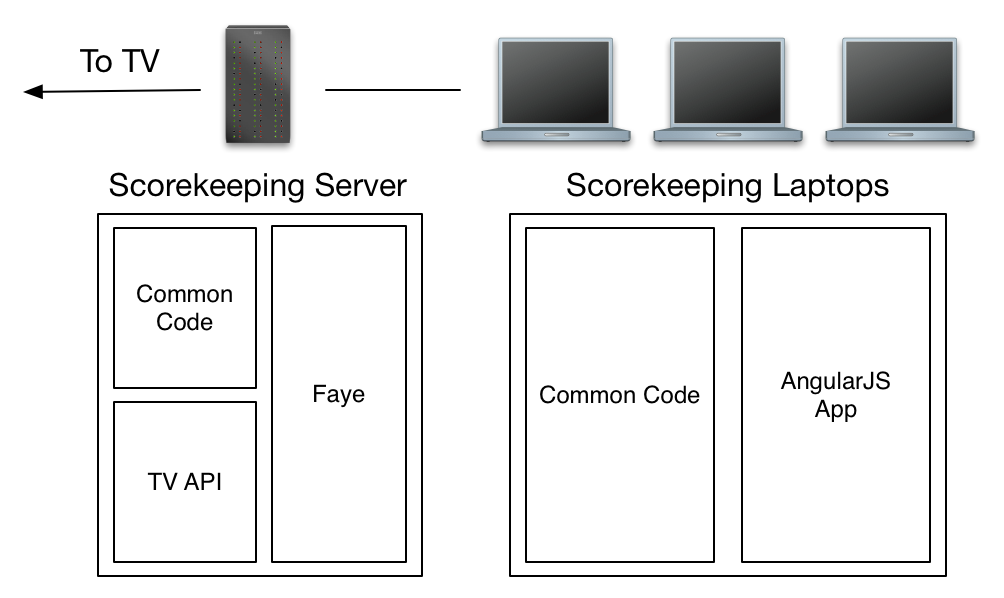

We needed a highly responsive application so our scorekeepers could report events as close to real time as possible. We chose AngularJS and found its two-way data binding invaluable – we could simply keep the race’s data on the scope, and the framework handled all view updates for us. Since we weren’t concerned about misbehaving clients, all the business rules of the races (for example, when athletes are allowed to advance) could be implemented on the client side.

We still had a small server component – providing persistence and the TV endpoint – so we used node.js for our backend and shared code with browserify. Since we could run the same codebase on client and server, we didn’t have to worry about duplication or deviation in logic.
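
To make the code-sharing idea concrete, here is a minimal sketch of a rules module written once in CommonJS style. The file name `rules.js`, the functions `canAdvance` and `recordRep`, and the rule itself are illustrative assumptions; the real NPGL rules are not described in this post.

```javascript
// rules.js - hypothetical shared module. The same file could be required by
// the node.js server and bundled for the browser with browserify.

// Illustrative rule: an athlete may advance once every required rep is done.
function canAdvance(athlete) {
  return athlete.completedReps >= athlete.requiredReps;
}

// Returns a new athlete record; the input object is left untouched.
function recordRep(athlete) {
  if (canAdvance(athlete)) return athlete; // ignore reps past the target
  return { ...athlete, completedReps: athlete.completedReps + 1 };
}

// Export for CommonJS consumers (guarded so the snippet also runs standalone).
if (typeof module !== 'undefined') {
  module.exports = { canAdvance, recordRep };
}
```

On the server this would be loaded with `require('./rules')`; browserify resolves the same `require` call when it builds the browser bundle, so both sides evaluate identical logic.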

The Commentator Information Screen, mid-race

Dealing with Race Conditions

The races are fast-paced, so multiple scorekeepers are necessary to keep track of what’s going on. We needed a way to ensure the state of each browser stayed in sync, and to handle concurrent, possibly conflicting messages between browsers.

For messaging between the browsers, we used faye. During a race, all connected clients are listening for messages on a shared channel. When a scorekeeper reports an event (for example, an athlete completes a lift), a message is published on that channel. Browser state is only updated upon message receipt, even for the browser that originated the message.

Before updating, each browser has the opportunity to guard against conflicting messages. Since the browsers start in the same state, run the same codebase, and receive messages in the same order, they all reach the same result after processing each message. In this way, we keep them in sync without having to communicate back to the server.
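
The approach described above can be sketched roughly as follows. A tiny in-memory channel stands in for faye so the example runs on its own, and the message shape (`type`, `athlete`, `rep`) and function names are assumptions made for illustration, not the actual protocol.

```javascript
// In-memory stand-in for the shared channel: every subscriber receives every
// message in the same order, including the client that published it.
function createChannel() {
  const subscribers = [];
  return {
    subscribe(handler) { subscribers.push(handler); },
    publish(message) { subscribers.forEach((handler) => handler(message)); },
  };
}

// Pure update function shared by all clients. The guard drops a message that
// conflicts with current state, e.g. two scorekeepers reporting the same rep.
function applyMessage(state, message) {
  if (message.type !== 'REP_COMPLETED') return state;
  const done = state.reps[message.athlete] || 0;
  if (message.rep !== done + 1) return state; // duplicate or out of sequence
  return { ...state, reps: { ...state.reps, [message.athlete]: message.rep } };
}

function createScorekeeper(channel) {
  const keeper = { state: { reps: {} } };
  // State changes only when a message is received, never on the click itself.
  channel.subscribe((message) => {
    keeper.state = applyMessage(keeper.state, message);
  });
  keeper.reportRep = (athlete) => {
    const next = (keeper.state.reps[athlete] || 0) + 1;
    channel.publish({ type: 'REP_COMPLETED', athlete, rep: next });
  };
  return keeper;
}

const channel = createChannel();
const keeperA = createScorekeeper(channel);
const keeperB = createScorekeeper(channel);

// Two scorekeepers see rep 1 and report it at nearly the same moment.
channel.publish({ type: 'REP_COMPLETED', athlete: 'athlete-7', rep: 1 });
channel.publish({ type: 'REP_COMPLETED', athlete: 'athlete-7', rep: 1 }); // dropped by the guard
keeperB.reportRep('athlete-7'); // rep 2, applied by both clients

console.log(keeperA.state.reps, keeperB.state.reps); // both hold 2 reps for athlete-7
```

Because `applyMessage` is a pure function of the previous state and the message, any two clients that see the same message sequence end up with identical state, which is the property the scheme relies on.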

Some Bumps and Bruises

Along the way, we learned quite a bit:

- Websockets are cheap, but not free. In the beginning, we were sending much more data over websockets, and much more often, than we do now. During an early trial run, response times started to slow down once a large amount of data had accumulated. Sending data over websockets was so easy that we didn’t pay enough attention to how much we were sending.

- When sharing code between client and server, be wary of any inconsistencies between the two. For the most part, our client and server ran the same codebase. However, the browser persisted its data in memory, while the server used a database as its store. Since server-side data access was asynchronous while client-side was not, we eventually ran into sporadic inconsistencies between them – which were difficult to track down.

- Software isn’t the only point of failure. We had planned for both client and server failure as best we could. Imagine our dismay when we saw the data feed to the Jumbotron break, and realized that a network cable had failed!
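
On the second lesson, one possible way to narrow the gap between a synchronous in-memory store and an asynchronous database is to give both the same promise-based interface. This is a sketch of that idea, not a description of what we actually shipped; `createMemoryStore` and `incrementReps` are hypothetical names.

```javascript
// Hypothetical in-memory store for the browser. Its methods return promises,
// so shared code has to await it exactly as it would await a database.
function createMemoryStore() {
  const data = new Map();
  return {
    get: (key) => Promise.resolve(data.get(key)),
    set: (key, value) => {
      data.set(key, value);
      return Promise.resolve();
    },
  };
}

// Shared code written against the async interface only.
async function incrementReps(store, athleteId) {
  const current = (await store.get(athleteId)) || 0;
  await store.set(athleteId, current + 1);
  return current + 1;
}
```

Callers still need to await each call before issuing the next one. The sketch only makes the client's timing resemble the server's, so that ordering bugs are more likely to show up in both environments rather than in just one.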

Looking on from the scorekeeping table in Las Vegas

Just the Beginning…

Although we’ve come a long way in just a few months, there’s still a lot to do. Manual scorekeeping isn’t as accurate as we’d like, so eventually we plan on using hardware to capture race data. As the events get bigger, the cost of failure keeps rising – so we’re exploring how technologies like node-webkit or WebRTC might remove the server component from the critical path. And as the NPGL takes its matches on the road for the regular season, we’d like to automate system setup and teardown. Season 2 is just around the corner!