Crank Your Specs

One of the downsides to test driven development (TDD) on a large project is that over time your suite of tests will ultimately slow down. Whether it’s stale code, bloated tests, or just an increasing number of features to support, the more tests you write, the more time you will spend watching them run. Imagine how many times the oldest test you wrote gets run over the life of the project. Thousands? Tens of thousands?

At the start of a project, when all is ponies and rainbows, the cost of running all your tests is marginal. But after 6 months, 20 ActiveRecord classes, 15,000 lines of code, and 2,000 specs, you might be staring across the chasm of a 5 minute test suite. 5 minutes before you can check in a code change. 5 minutes before your feature passes continuous integration and hits the deployment server. Ugh.

Round 1

A little background first — on this project we are running rails 3.0.3 on ruby 1.9.2 and testing with rspec and factorygirl. After reading the road to faster tests and armed with perftools.rb, I decided to do some investigating.

Finished in 248.22 seconds

1916 examples, 0 failures

Total: 27519 samples

9565 34.8% 34.8% 9565 34.8% garbage_collector

3281 11.9% 46.7% 3314 12.0% Mysql2::Client#query

1055 3.8% 50.5% 1058 3.8% Module#remove_method

1001 3.6% 54.2% 1500 5.5% Kernel#require

347 1.3% 55.4% 347 1.3% IO#write

Garbage collection is taking up 35% of the test time! Wow. Here’s the REPL that I used to query the ObjectSpace (in spec/spec_helper.rb):

config.after(:suite) do

while true

'> '.display

begin

puts eval($stdin.gets)

rescue Exception => e

puts e.message

end

end

end

And the results:

>@count = 0; ObjectSpace.each_object(ActiveRecord::Base) { @count += 1 }

16932

>ObjectSpace.count_objects

{:TOTAL=>2215274, :FREE=>477896, :T_OBJECT=>103363, :T_CLASS=>20276, :T_MODULE=>1550, :T_FLOAT=>3602, :T_STRING=>1078471, :T_REGEXP=>3295, :T_ARRAY=>171818, :T_HASH=>170042, :T_STRUCT=>12041, :T_BIGNUM=>167, :T_FILE=>6, :T_DATA=>122410, :T_MATCH=>497, :T_COMPLEX=>1, :T_RATIONAL=>3161, :T_NODE=>33802, :T_ICLASS=>12876}

17,000 ActiveRecord objects and 1.7 million total objects in use. Looks like rspec is holding onto instance variables and leaking memory just like Test::Unit. No wonder garbage collection is a dog. So I went ahead and applied the fix from Jamis like this:

RSpec.configure do |config|

...

RESERVED_IVARS = %w(@loaded_fixtures)

last_gc_run = Time.now

config.before(:each) do

GC.disable

end

config.after(:each) do

(instance_variables - RESERVED_IVARS).each do |ivar|

instance_variable_set(ivar, nil)

end

if Time.now - last_gc_run > 1.0

GC.enable

GC.start

last_gc_run = Time.now

end

end

end

Finished in 145.54 seconds 1916 examples, 0 failures

After releasing instance variables and triggering garbage collection every second, we just gained 100 seconds (40%!?!) of our developer lives back. Hell yeah.

Round 2

Let’s profile it again and see if anything else jumps out:

Total: 17445 samples

2673 15.3% 15.3% 2673 15.3% garbage_collector

1971 11.3% 26.6% 1984 11.4% Mysql2::Client#query

962 5.5% 32.1% 1452 8.3% Kernel#require

950 5.4% 37.6% 953 5.5% Module#remove_method

336 1.9% 39.5% 1000 5.7% ActiveRecord::AttributeMethods::Read#read_attribute

Mysql2::Client#query is the next biggest contributor. For a while I have been wary of the way factorygirl automatically builds associations for you. For example in a typical blog app a Factory(:comment) will create a comment, its author, its post, its post’s author, etc, in order to build up a valid object graph. It would be nice if we could avoid taking this hit for every test.

The solution is to leverage the speed of fixtures with the ease and explicitness of factories. Instead of building a new object for the association, we replace it with User.first, Comment.first, Post.first, Blog.first, etc and rely on a single object defined in the fixture. This results in a single query to the database. We are basically using transactional fixtures as singletons which are linked together in a valid graph by default and get reset after every test run.

Factory.define(:comment) do |f|

f.body "This is my first comment"

f.user { User.first }

f.post { Post.first }

end

The knock against fixtures has always been that they are difficult to maintain since they don’t live in code. And they introduce dependencies between unrelated tests which should be running in isolation. Having one valid object per YAML fixture file seems like a perfectly good trade off and none of the downside. The results:

Finished in 103.22 seconds

1916 examples, 0 failures

Total: 13290 samples

1881 14.2% 14.2% 1881 14.2% garbage_collector

1290 9.7% 23.9% 1307 9.8% Mysql2::Client#query

1019 7.7% 31.5% 1021 7.7% Module#remove_method

973 7.3% 38.8% 1446 10.9% Kernel#require

304 2.3% 41.1% 304 2.3% Module#to_s

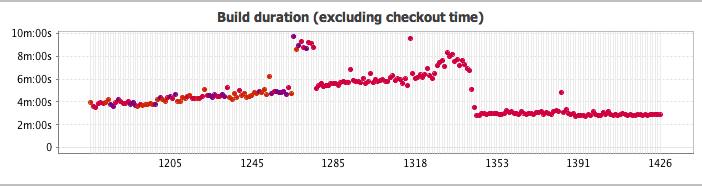

Another 40 seconds of our developer lives back. Here are the stats from our continuous integration server (TeamCity):

Conclusion

With a little bit of research and some minor enhancements, we were able to cut our test time by more than half. The graph from TeamCity shows a remarkably consistent test suite since the change. As you can see the trend was not tenable. Take the time to investigate why your tests are running slowly. The amount of time and suffering that you save your team will be worth it. After all, what would TDD be without an efficient red-green-refactor cycle?

Further Reading

Ben Lindsey

Runner; Chef; Trancer; Traveler; Technologist; Agile evangelist. I'm a firm believer in that which you measure will surely improve. Currently I am working on how to test drive and scale a node.js application in the cloud.