Using HAProxy with Socket.io and SSL

Donning my ops hat over the last few months, I have learned a fair amount about HAProxy, Node.js, and Socket.io. I was surprised by how little definitive information there was on what I was trying to do for one of our projects, and HAProxy can be pretty intimidating the first time around.

What and Why

- Route all traffic through a load-balancing proxy in preparation for horizontal scale and splitting of services (i.e. X and Y axes on the AKF Scale Cube).

- Support Socket.io’s websocket and flashsocket transports. Our application sends/receives many events to/from its clients and requires low latency for a great user experience; persistent sockets help make that happen. Sadly, IE before version 10 has no native websocket support, so it has to fall back to Flash sockets.

- Use TLS/SSL for all traffic for security and to help push through finicky internet infrastructure. It’s surprising how many organizations have firewalls that disallow socket traffic. SSL traffic is often allowed through.

- Terminate SSL at the proxy so that we don’t have to deal with certs and whatnot in the application.

- Redirect all HTTP traffic to HTTPS.

To make all of this happen, I cobbled together information from a few posts and dug into the documentation to fill in the gaps. We’ve been using our HAProxy configuration for a couple of months now and it’s working well.

HAProxy

If you’re not familiar with HAProxy, it’s a lightweight, high-performance software TCP/HTTP proxy/load-balancer. It’s been around for a long time and is well regarded as one of the best in any class. GitHub, Twitter, Tumblr, Instagram, 37 Signals, and many other services use it. It is quite feature rich for such a small program (~400k).

SSL support is new to HAProxy and is only available in the dev builds, the latest of which is v1.5-dev18. This is awesome because we can eliminate the use of stunnel (or Nginx) for SSL termination, keeping the overall architecture about as simple as possible. 1.5.x is a development line and might not be stable. In my experience, it’s been solid for the configuration we’re using.
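To make the SSL termination and HTTP-to-HTTPS redirect goals concrete, here is a minimal sketch of what they can look like in a 1.5-dev configuration. It is not the configuration we run: the certificate path, the `public` and `app` names, the timeouts, and the server address are all placeholders I made up for illustration.

```haproxy
# Sketch only: assumes a 1.5-dev build compiled with SSL support.
# Certificate path, section names, timeouts, and server address are hypothetical.
defaults
    mode http
    timeout connect 5s
    timeout client  50s
    timeout server  50s

frontend public
    bind :80
    # Terminate SSL at the proxy; the PEM file holds the certificate and private key.
    bind :443 ssl crt /etc/haproxy/example.pem
    # Redirect any request that did not arrive over SSL to its HTTPS equivalent.
    redirect scheme https if !{ ssl_fc }
    default_backend app

backend app
    # The application only ever sees plain HTTP and never touches a certificate.
    server node1 127.0.0.1:3000
```

Because the proxy owns both the `:80` and `:443` listeners, the redirect and the certificate handling live in one place and the Node.js process needs no SSL setup of its own.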

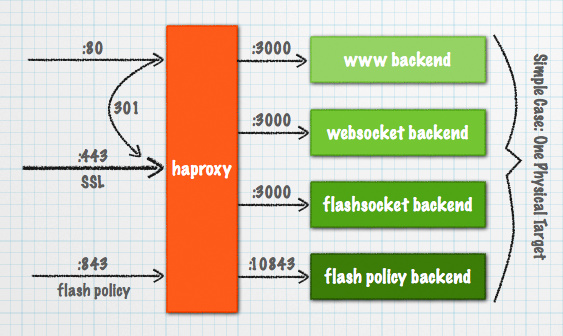

Overview

The Goods

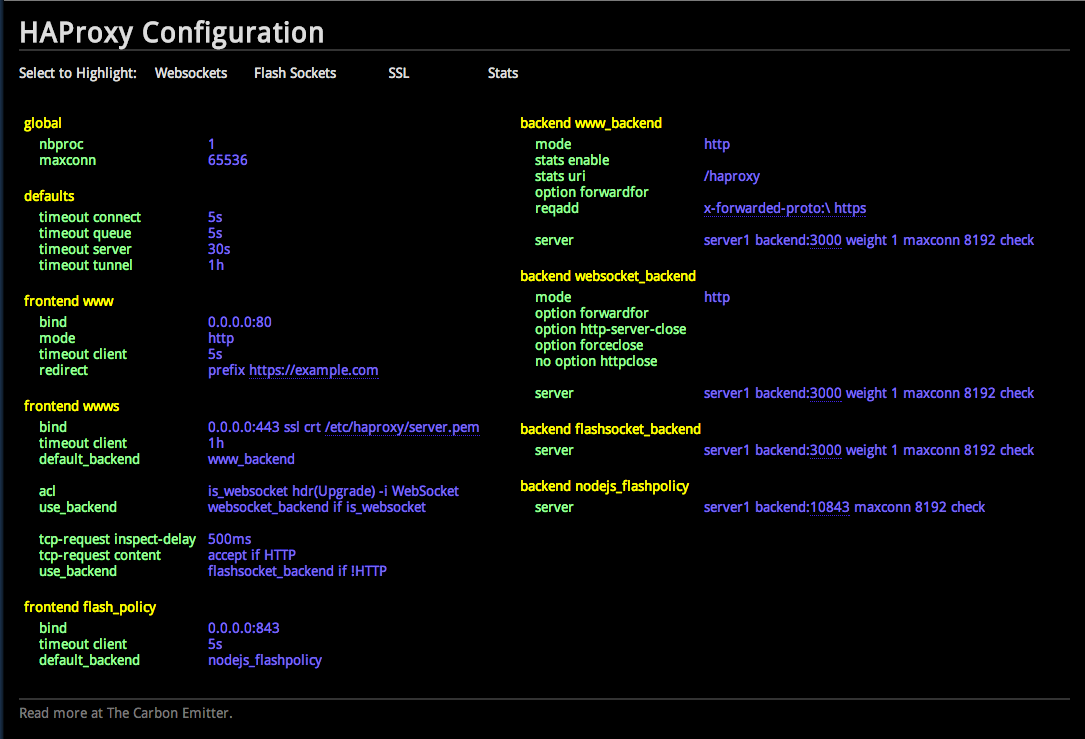

Rather than embedding a configuration file in this post, I created an interactive configuration page for you to check out and explore. The directives are links to HAProxy documentation, the interesting settings are annotated, and you can highlight the blocks of configuration that support our above goals.

Here’s a gist of the same configuration if you’re looking for something a little more raw.

Considerations

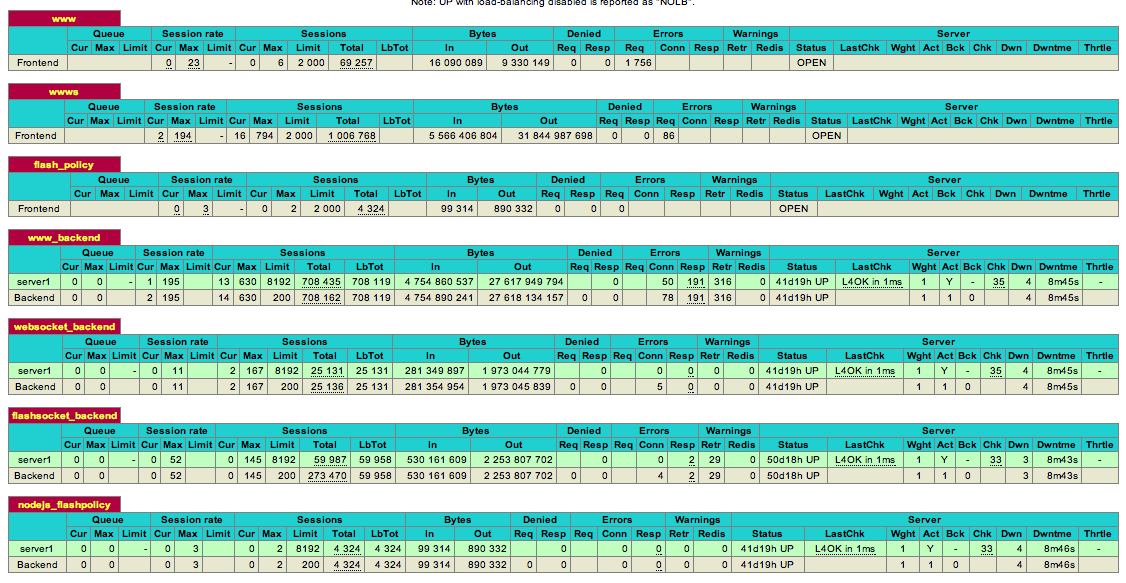

You might ask why we would split HTTP, Web Socket, and Flash Socket traffic into separate backends only to send it all to the same backend server. The immediate benefit is that we can track stats for each backend separately.

It also sets us up for eventual routing to different backends (i.e. the Y axis). For example, it’s considered a best practice to route Socket.io traffic to a dedicated cluster of backends, separate from handling regular web traffic.
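As a rough illustration of that split, the sketch below routes Socket.io requests to their own backend while still pointing both backends at the same server. The ACL, backend, and server names, the addresses, and the stats port are hypothetical, and the `/socket.io/` path assumes Socket.io's default resource path.

```haproxy
# Sketch only: all names, addresses, and ports are hypothetical.
defaults
    mode http
    timeout connect 5s
    timeout client  50s
    timeout server  50s

frontend public
    bind :443 ssl crt /etc/haproxy/example.pem
    # Websocket handshakes carry an Upgrade header.
    acl is_websocket hdr(Upgrade) -i WebSocket
    # Assumes Socket.io is mounted at its default /socket.io/ path.
    acl is_socketio path_beg /socket.io/
    use_backend ws if is_websocket or is_socketio
    default_backend www

backend www
    server node1 127.0.0.1:3000

backend ws
    # Same server for now; moving to a dedicated cluster later only changes this block.
    server node1 127.0.0.1:3000

listen stats
    # Each backend gets its own row of counters on the stats page.
    bind 127.0.0.1:8404
    stats enable
    stats uri /
```

Keeping `www` and `ws` as separate backends costs nothing today, and the frontend rules stay untouched when the socket traffic eventually moves to its own machines.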

This configuration doesn’t include support for load balancing/fail-over. When I have more experience, I’ll write about it. It’s an interesting topic and is complicated by the use of Socket.io.

Why not use Nginx? When we started, Nginx did not support proxying websockets. Support has since been added but I haven’t tried it (though one of our clients did and had issues getting it working). Also, it might be tricky pushing flash socket traffic through since it’s raw TCP (websocket traffic looks like HTTP).
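Handling the raw TCP side is one place where HAProxy is convenient, because a TCP-mode frontend can sit next to the HTTP one. The sketch below assumes the non-HTTP traffic in question is the Flash socket policy request, which Flash Player sends to port 843 by default. The section names and the backend port are hypothetical, so point the `server` line at wherever your policy server actually listens.

```haproxy
# Sketch only: section names and the backend address are hypothetical.
# Flash Player asks for a socket policy file on TCP port 843 by default.
frontend flash_policy
    mode tcp
    bind :843
    timeout client 5s
    default_backend flash_policy_server

backend flash_policy_server
    mode tcp
    timeout connect 5s
    timeout server 5s
    # Assumed address of the process that answers policy requests.
    server node1 127.0.0.1:10843
```

In `mode tcp` HAProxy simply relays bytes without trying to parse them as HTTP, which is what a non-HTTP request like this needs.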

Check it out and let me know if you have any tweaks or tips of your own.

Good day,

Christian

References

- HAProxy Documentation

- Nginx, Websockets, SSL and Socket.IO deployment on Mixu’s tech blog

- How to get SSL with HAProxy getting rid of stunnel, stud, nginx or pound on the Exceliance Blog

Christian Nelson

Christian is a software developer, technical lead and agile coach. He's passionate about helping teams find creative ways to make work fun and productive. He's a partner at Carbon Five and serves as the Director of Engineering in the San Francisco office. When not slinging code or playing agile games, you can find him trekking in the Sierras and playing with his daughters.