How to save 90% of your development budget

Carbon Five was recently brought in to build a new product with a planned budget of six months. As the first step, we conducted a few rounds of customer development to try to validate the concept. After a month of experiments by a product manager and a designer, we ultimately recommended that the company not pursue the idea. Our client spent a few weeks of consulting fees but saved more than 90% of their budget by not building anything.

The client for this project provides software to a niche set of businesses. As more and more competition started popping up, they believed they saw an opportunity to create a digital marketplace in their niche. Before Carbon Five started building software, the client wanted us to confirm demand for the marketplace.

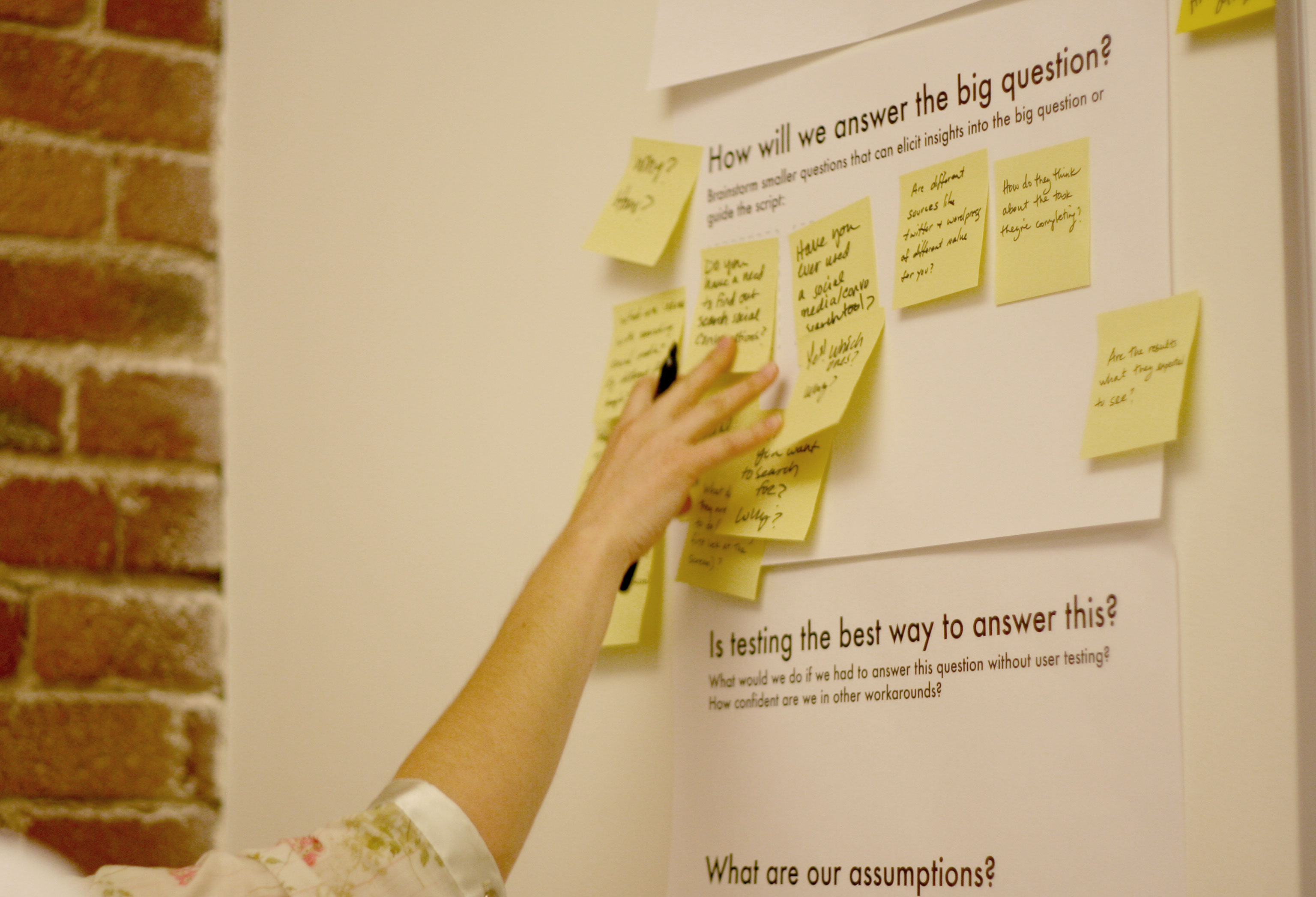

Over the course of a month, we set up 15 demand experiments that ran across Google AdWords, the client’s marketing website, and the client’s current software products. We supplemented our quantitative tests with empathy and problem interviews.

There are lots of great blog posts and books on how to properly conduct customer development, so I won’t go into our exact methods in this post. A few examples of good resources are a Carbon Five blog post series on user research and one of our favorite books, The Mom Test by Rob Fitzpatrick.

Two weeks into the engagement, our demand tests were not revealing positive results. The blog post we published had only half the click-through rate we expected. Our A/B tested banner ads converted at roughly 20% of the rate of their controls. Other experiments came back with similar results. Furthermore, our interviews showed that customers did not perceive a significant need for the product.
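For readers who want to see how a comparison like "the variant converted at 20% of the control" can be worked out, here is a minimal sketch. The visitor and conversion counts are hypothetical numbers chosen only for illustration, not our client's data, and the function names are made up for this example. The two-proportion z-test is one common way to check whether a gap like this is likely to be noise; it is not necessarily the analysis we ran.

```python
import math


def conversion_rate(conversions: int, visitors: int) -> float:
    """Fraction of visitors who converted."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts, for illustration only.
control_visitors, control_conversions = 1000, 50   # existing banner
variant_visitors, variant_conversions = 1000, 10   # marketplace banner

control_rate = conversion_rate(control_conversions, control_visitors)
variant_rate = conversion_rate(variant_conversions, variant_visitors)

# How the variant performed relative to the control (about 0.2 here, i.e. 20%).
relative = variant_rate / control_rate

z, p_value = two_proportion_z_test(
    variant_conversions, variant_visitors,
    control_conversions, control_visitors,
)

print(f"control: {control_rate:.1%}, variant: {variant_rate:.1%}")
print(f"variant converts at {relative:.0%} of the control rate")
print(f"z = {z:.2f}, two-sided p = {p_value:.2g}")
```

With sample sizes this large, a gap of this size is very unlikely to be chance. With only a handful of visitors per arm, the same ratio could easily be noise, which is one reason to keep running experiments before drawing a conclusion.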

The client stakeholders were surprised. They had received positive feedback when casually talking to their customers about the idea. However, asking friendly customers whether they would use a hypothetical new service will often give you different results than structured user research.

Despite the negative results starting to pile up, both the stakeholders and the Carbon Five team wanted more evidence before scrapping the product idea altogether. So, we spent the next two weeks running A/B tests with 10 different sets of copy. We also reached out to the small group that did convert on our landing pages and conducted more interviews to find out why they seemed to find the idea valuable.

The results from this second set of experiments were in line with the results from our first. With relatively concrete results pointing to a lack of demand in the market, the senior stakeholders decided to declare victory and end the project. But rather than view the project as a wasted month, the organization was able to see the results as learnings, not failings. And in the end, everyone was glad we didn’t waste time, money, and brand capital building a marketplace that customers ultimately didn’t want.